Saul – The first family of open models for law

Jul 30, 2024


Equall is developing frontier AI systems for legal work. Earlier this year, we released SaulLM, a state-of-the-art 7 billion (7b) parameter large language model (LLM) adapted specifically for the legal domain, and the first open language model for law. Since then, we’ve continued to scale and develop Saul.

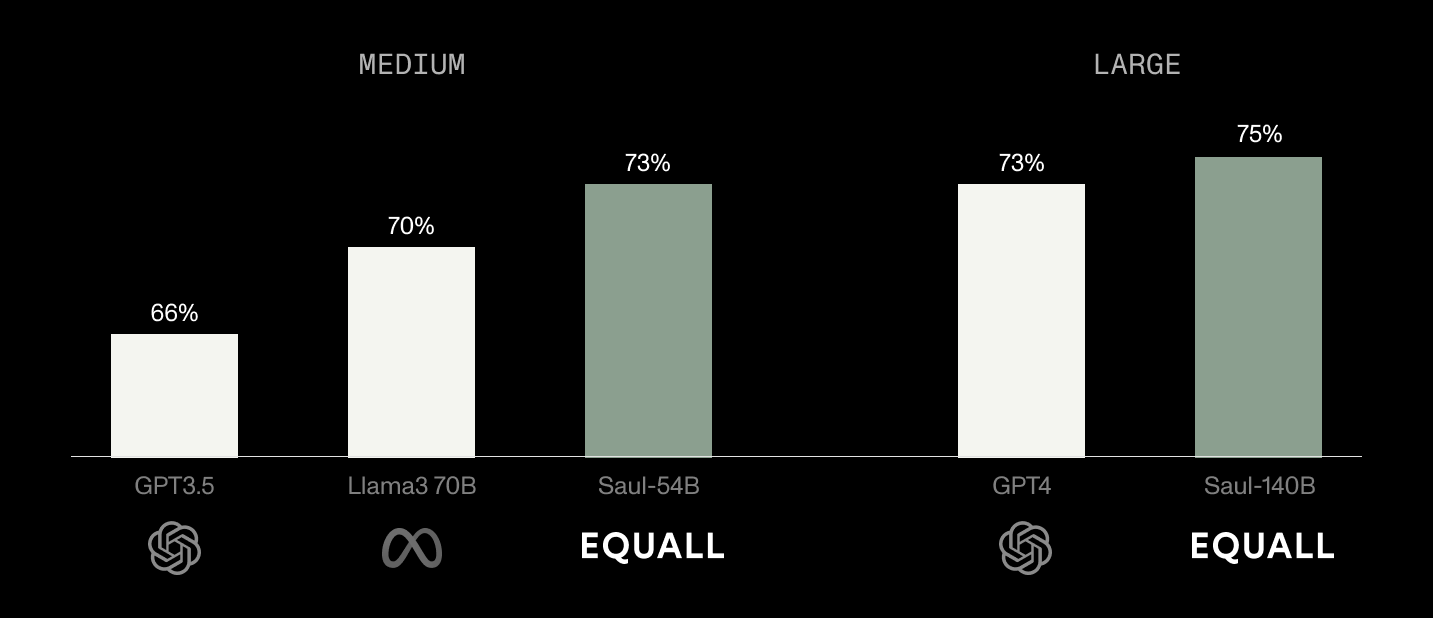

Today, we’re excited to introduce the Saul family of legal language models, now available in 54 billion (54b) and 141 billion (141b) sizes. Achieving state-of-the-art performance on legal reasoning benchmarks, Saul shows substantial performance gains over similarly sized models, and outperforms much larger commercial models, including GPT-4, across an array of legal tasks.

At Equall, we are committed to making legal intelligence broadly accessible. We are openly releasing the full family of Saul models, and sharing our research and training methodologies with the community, with the aim of spurring further innovation, research, and value creation in the legal industry.

Introducing the Saul family

Saul is a family of language models specialized for legal reasoning. The family now includes three sizes (7b, 54b, and 141b), each built on a general-purpose Mistral LLM architecture. To maximize performance, we undertook an adaptation process that involved continued pretraining, instruction fine-tuning (IFT), and preference alignment using domain-specific optimization (DSO). You can read our latest technical report to learn more about our data composition and adaptation methodologies.

A few key details include:

In February of this year, we released Saul-7b, the first free and publicly available LLM for law. As part of our domain-adaptation process, Saul-7b was trained using a very large corpus (30 billion tokens, or roughly 15 million pages) of legal data.

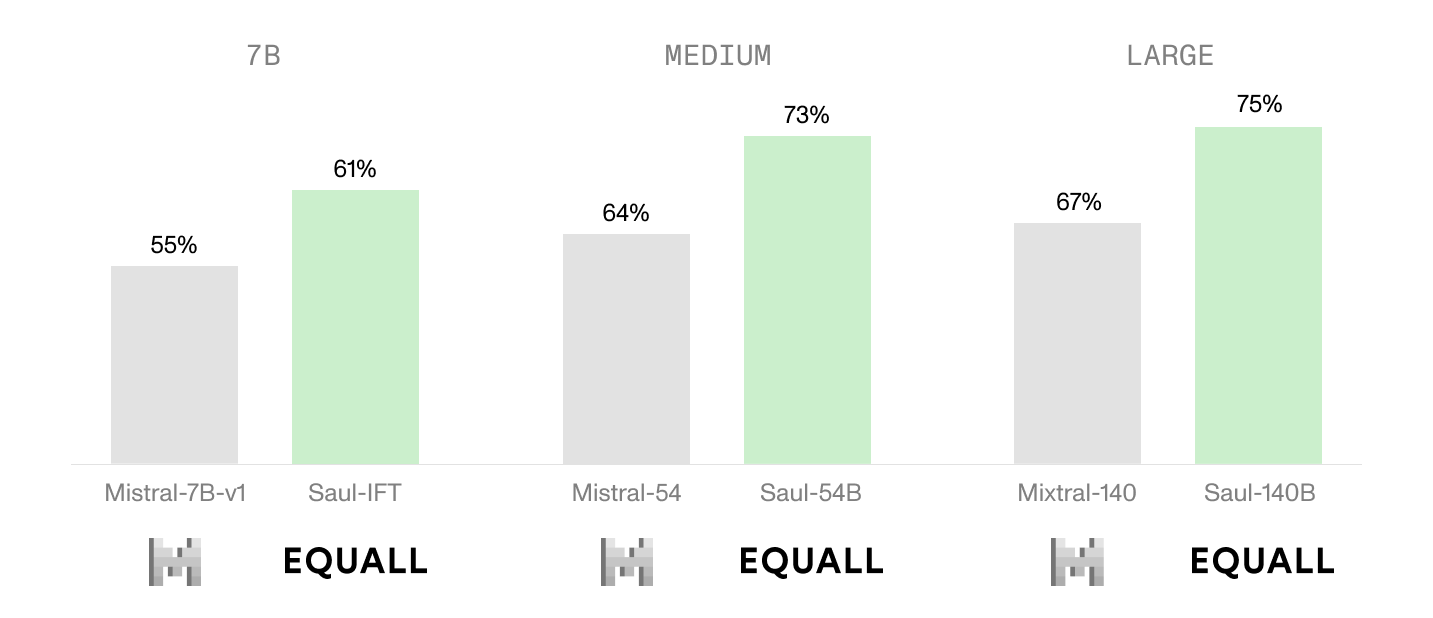

Our training process resulted in an average 6% performance gain over Mistral-7b across a range of legal tasks as measured by LegalBench, a leading benchmark used to evaluate legal reasoning in LLMs.

Leveraging insights from our initial training, we then trained Saul-54b and Saul-141b from an expanded and further curated pretraining corpus of legal text.

Each model was trained using AMD GPUs at ADASTRA, a French supercomputer renowned for its energy efficiency.

Today, we are releasing Saul-54b and Saul-141b, joining our initial 7b model to form the Saul family of open models for law. The following is a summary of our results and findings.

Distinguished legal performance at size

We measured the performance of Saul-54b and Saul-141b using LegalBench Instruct, an updated version of LegalBench. This benchmark comprises over 150 tasks across six legal reasoning categories: issue-spotting, rule-recall, rule-application, rule-conclusion, interpretation, and rhetorical understanding. Notably, Saul-54b and Saul-141b both surpassed similarly sized open and proprietary LLMs on the benchmark, and Saul-141b even outperformed OpenAI’s GPT-4, a vastly larger model, on average across the legal reasoning benchmark tasks.
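To make the phrase "on average across the benchmark tasks" concrete, here is a minimal Python sketch of one way per-task scores could be rolled up into per-category and overall averages. It is an illustration only: this post does not specify the aggregation procedure, so averaging over tasks is an assumption, the `summarize` helper is hypothetical, and the scores below are made-up placeholders rather than Saul results.

```python
from collections import defaultdict
from statistics import mean

# The six reasoning categories named in the text above.
CATEGORIES = (
    "issue-spotting",
    "rule-recall",
    "rule-application",
    "rule-conclusion",
    "interpretation",
    "rhetorical understanding",
)


def summarize(task_scores):
    """Aggregate (task_name, category, score) tuples.

    Returns (per_category_mean, overall_mean), where the overall mean is
    taken over tasks (an assumption, not a documented procedure).
    """
    rows = list(task_scores)  # allow generators; we iterate twice
    by_category = defaultdict(list)
    for _task, category, score in rows:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        by_category[category].append(score)
    per_category = {c: mean(s) for c, s in by_category.items()}
    overall = mean(score for _task, _category, score in rows)
    return per_category, overall


# Placeholder scores for illustration only; these are NOT benchmark results.
example = [
    ("task_a", "issue-spotting", 0.50),
    ("task_b", "issue-spotting", 0.75),
    ("task_c", "rule-recall", 1.00),
]
per_category, overall = summarize(example)
```

Averaging over tasks weights categories with more tasks more heavily. Averaging the per-category means instead would weight each category equally, so the two choices can rank models differently.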

While our training work is not complete, the results mark a significant achievement — and we hope that our research will light the path for further innovation.

Now, we are working to optimize the models for interactive experiences and other legal use cases through additional data integration, further fine-tuning, and enhanced user preference alignment.

Validating the promise of domain specialization

Our work with Saul further confirms that domain-specific models can surpass general-purpose systems for legal work. From deeper and more contextualized understanding, to increased accuracy and relevance, to expert-level insights, enhanced user experience and beyond — specialized models can meaningfully elevate the performance of AI in law. While the impact of specialized LLMs has already been well observed in several fields, like code, medicine and science, domain specialization in the legal industry has been less deeply explored. The SaulLM project aims to contribute to this discussion.

Saul reinforces that domain specialization offers breakthrough opportunities in law. Indeed, each of our domain-adapted Saul models showed marked improvement over the general-purpose base model across LegalBench’s legal reasoning tasks.

Because legal practice is deeply nuanced and intricate, unlocking the full potential of AI in law requires highly specialized systems that combine multiple models, diverse techniques, and complex algorithms to serve legal use cases and replicate legal work processes. The Saul family is only our initial contribution; there is much work ahead.

What’s next?

The entire Saul family of models is now available on Equall’s Hugging Face page. In the spirit of fostering innovation and increasing access to legal resources, we are releasing the Saul models openly, free for responsible use, under the permissive MIT license. As we continue to develop and scale the models, we hope that our SaulLM project contributes meaningfully to ongoing research and collaboration across the legal and NLP communities.

For questions about our model training research or our work in building intelligent workflow systems, please contact the Equall team at [email protected].